In a landscape where data arrives continuously, can businesses thrive without processing it efficiently? For most, the answer is no. The ability to collect and analyze large datasets is no longer a luxury; it underpins innovation, competitiveness, and sustainable growth.

Batch processing has moved from a specialized technique to a fundamental building block of data-driven systems. It lets organizations run repetitive tasks efficiently and consistently. Whether you work with networks of IoT devices, cloud infrastructure, or enterprise applications, knowing how to design and run remote IoT batch jobs can raise productivity and streamline workflows.

This article walks through a remote IoT batch job example, covering its practical applications, its advantages, and strategies for implementing it successfully. With these principles in hand, you can design and run batch processing workflows tailored to your own operational needs.

Understanding RemoteIoT Batch Job

Advantages of Batch Processing

Practical RemoteIoT Batch Job Example

Tools for Efficient Batch Processing

Configuring a Batch Job

- Step 1: Identify Data Sources

- Step 2: Develop a Script

Recommended Practices for Batch Processing

Addressing Common Challenges

Real-World Use Cases

Enhancing Batch Processing Performance

Future Directions in Batch Processing

Summary

Understanding RemoteIoT Batch Job

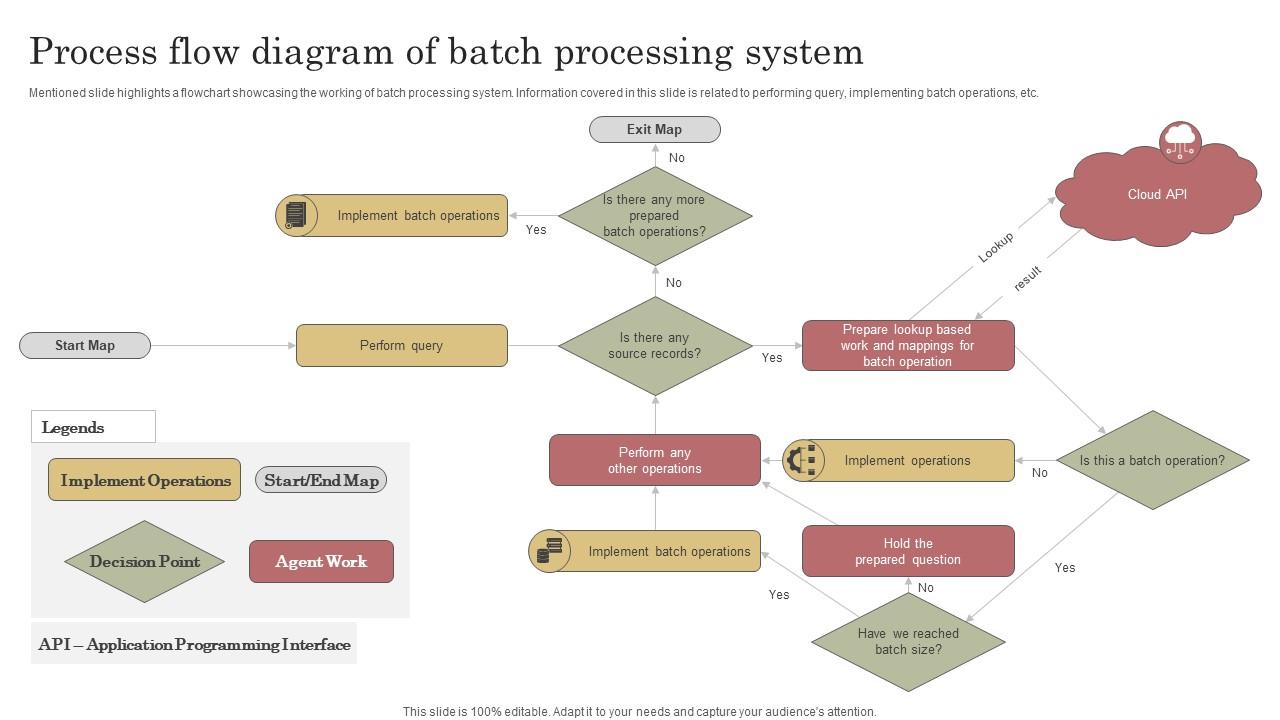

A RemoteIoT batch job is the scheduled execution of a series of predefined tasks over a collected set of data, processed in bulk rather than one record at a time. The data typically comes from IoT devices or other remote systems, and the approach suits large datasets that need systematic handling. Unlike real-time processing, which responds to each event as it arrives, a batch job runs at set intervals or times (for example, nightly). Running jobs at off-peak times spreads the workload and reduces strain on systems during peak usage periods.
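To make the scheduling idea concrete, here is a minimal Python sketch that computes when a daily batch job should next run, assuming a hypothetical off-peak slot of 02:00 local time. In practice a scheduler such as cron or a managed cloud scheduler handles this; `next_run` and `upcoming_runs` are illustrative names, and time zones and daylight-saving changes are deliberately ignored.

```python
from datetime import datetime, time, timedelta


def next_run(now: datetime, run_at: time = time(hour=2)) -> datetime:
    """Return the next datetime at which a daily batch job should start.

    The 02:00 default is a hypothetical off-peak slot; pick one that matches
    the load profile of your own systems.
    """
    candidate = datetime.combine(now.date(), run_at)
    if candidate <= now:
        # Today's slot has already passed, so schedule for tomorrow.
        candidate += timedelta(days=1)
    return candidate


def upcoming_runs(now: datetime, count: int, run_at: time = time(hour=2)) -> list:
    """List the next `count` daily run times, e.g. to preview a schedule."""
    first = next_run(now, run_at)
    return [first + timedelta(days=i) for i in range(count)]


if __name__ == "__main__":
    for run in upcoming_runs(datetime(2024, 5, 1, 14, 30), 3):
        print(run.isoformat())
```

Run as a script, the demo prints the next three 02:00 slots after 14:30 on 1 May 2024, starting with `2024-05-02T02:00:00`.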

Batch processing is common across industries such as manufacturing, healthcare, finance, and telecommunications. Because it handles repetitive tasks consistently, it is a natural fit for organizations trying to improve operational efficiency. Feeding data from remote IoT devices into a batch pipeline broadens what a business can collect and analyze, which in turn supports better-informed decisions and targeted operational improvements.

Advantages of Batch Processing

Remote IoT batch jobs offer several benefits that can improve business operations. The key advantages are:

- Cost Efficiency: Batch processing automates repetitive tasks, which reduces the need for constant human intervention and can lower operating costs.

- Improved Accuracy: Automation removes manual steps such as copying or re-entering data, which reduces human error. Results stay consistent from run to run, provided the job logic itself is correct and tested.

- Scalability: A batch job can be split across more workers or machines as data volume grows, which makes it a good fit for businesses whose processing demands are increasing.

- Resource Optimization: Scheduling jobs during off-peak hours uses capacity that would otherwise sit idle and keeps heavy processing away from the times when interactive systems need those resources.

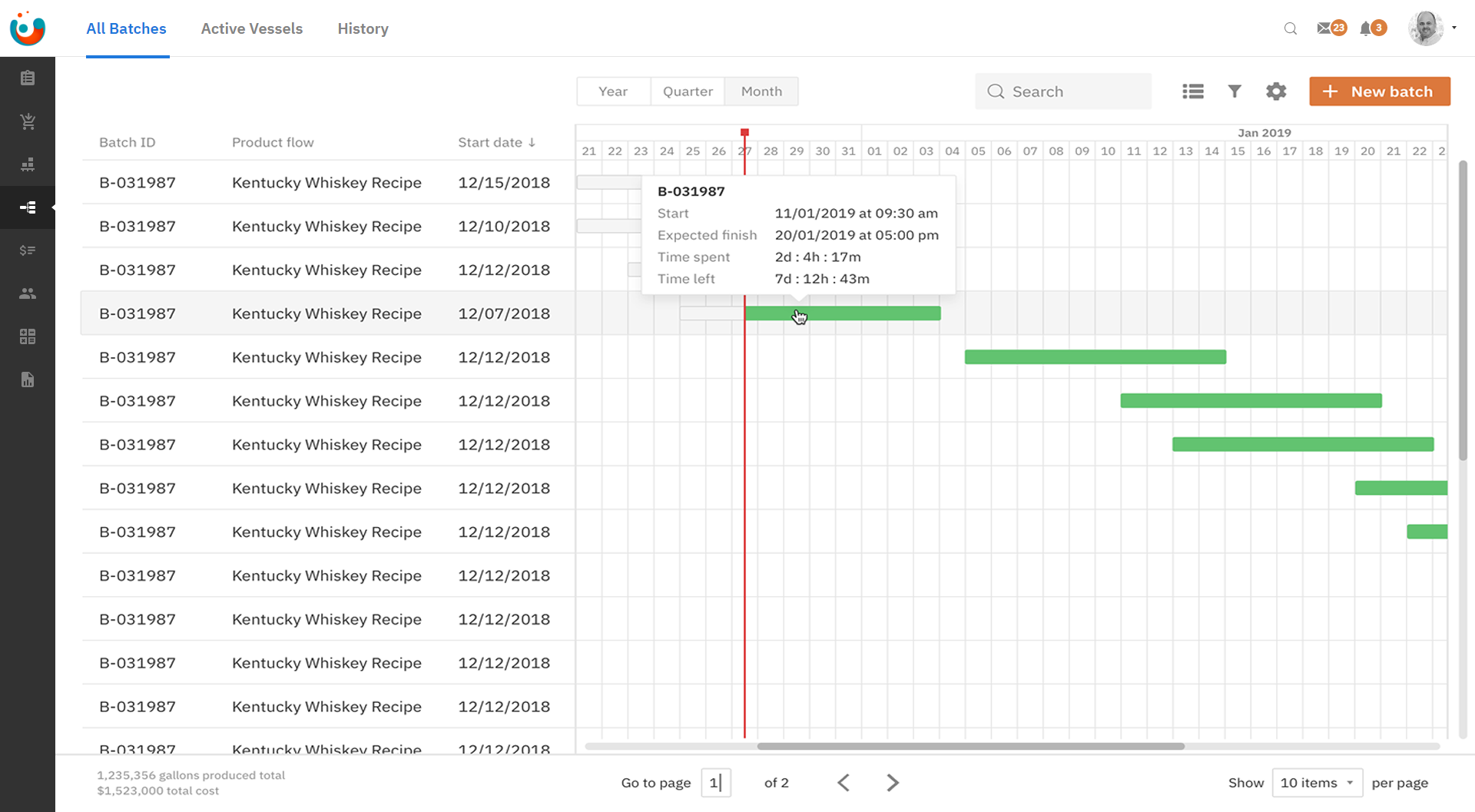

Practical RemoteIoT Batch Job Example

Consider a remote IoT batch job in a smart agriculture system. A farm has a network of sensors monitoring key parameters such as temperature, humidity, and soil moisture. To turn these readings into actionable insights, a batch job can be scheduled to process the data collected over each 24-hour period.

In this scenario, the batch job could include the following steps:

- Data Collection: Gathering raw readings from the IoT sensors placed across the farm.

- Data Cleaning and Aggregation: Removing invalid or inconsistent readings (for example, missing or out-of-range values) and grouping the rest so the data that reaches analysis is accurate.

- Data Analysis: Calculating metrics, such as average temperature or average soil moisture, that summarize the farm's conditions.

- Report Generation: Producing reports or alerts from the processed data so farmers can make informed decisions about crop management and resource allocation.
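The four steps above can be sketched as a small Python pipeline. Everything here is hypothetical: the metric names, the plausibility ranges in `VALID_RANGES`, and the 20% soil-moisture alert threshold are placeholders for whatever your sensors and agronomists actually define, and the data arrives as in-memory tuples rather than from a real device API.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean
from typing import Optional

# Hypothetical plausibility ranges per metric; readings outside them are dropped.
VALID_RANGES = {
    "temperature_c": (-40.0, 60.0),
    "humidity_pct": (0.0, 100.0),
    "soil_moisture_pct": (0.0, 100.0),
}


@dataclass
class Reading:
    sensor_id: str
    metric: str
    value: Optional[float]  # None models a dropped or unreadable sample


def collect(raw_rows):
    """Data collection: turn raw (sensor_id, metric, value) tuples into Readings."""
    return [Reading(sensor_id, metric, value) for sensor_id, metric, value in raw_rows]


def clean(readings):
    """Data cleaning: drop missing values, unknown metrics and out-of-range values."""
    kept = []
    for r in readings:
        bounds = VALID_RANGES.get(r.metric)
        if r.value is None or bounds is None:
            continue
        low, high = bounds
        if low <= r.value <= high:
            kept.append(r)
    return kept


def aggregate(readings):
    """Aggregation and analysis: average value per metric over the batch."""
    grouped = defaultdict(list)
    for r in readings:
        grouped[r.metric].append(r.value)
    return {metric: round(mean(values), 2) for metric, values in grouped.items()}


def report(averages, min_soil_moisture=20.0):
    """Report generation: one line per metric plus a simple irrigation alert."""
    lines = [f"{metric}: {avg}" for metric, avg in sorted(averages.items())]
    moisture = averages.get("soil_moisture_pct")
    if moisture is not None and moisture < min_soil_moisture:
        lines.append(
            f"ALERT: average soil moisture {moisture}% is below {min_soil_moisture}%"
        )
    return "\n".join(lines)


def run_daily_job(raw_rows):
    return report(aggregate(clean(collect(raw_rows))))


if __name__ == "__main__":
    sample = [
        ("s1", "temperature_c", 21.0),
        ("s2", "temperature_c", 23.0),
        ("s1", "soil_moisture_pct", 18.0),
        ("s2", "soil_moisture_pct", 16.0),
        ("s3", "soil_moisture_pct", None),  # dropped: missing value
        ("s3", "temperature_c", 999.0),     # dropped: out of range
    ]
    print(run_daily_job(sample))
```

Keeping each step in its own function makes it easy to test a step in isolation or to swap one out, for example replacing the in-memory `collect` with a call to your device platform.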

Tools for Efficient Batch Processing

Choosing the right tools is important when implementing remote IoT batch jobs. Popular options include:

- Apache Spark: An engine for large-scale data processing that supports both batch and stream processing. Its versatility makes it a strong choice for organizations handling massive datasets.

- Amazon Web Services (AWS) Batch: A fully managed service for running batch computing workloads in the cloud; it provisions compute resources based on the volume and requirements of the submitted jobs.

- Microsoft Azure Batch: A service for running large-scale parallel and batch computing applications in the cloud, integrated with other Azure services.

- Google Cloud Dataflow: A fully managed service for running Apache Beam data processing pipelines, in both batch and streaming modes, at scale.

Configuring a Batch Job

Setting up a remote IoT batch job involves several steps:

Step 1: Identify Data Sources

Start by identifying the IoT devices or systems that will supply data to your batch job. Confirm that each device is correctly configured and sends data in the format the job expects; catching malformed or unexpected data at this stage keeps the collected data accurate and relevant.
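One way to enforce "the format the job expects" is to validate every incoming message before it enters the batch. The Python sketch below assumes, hypothetically, that devices send JSON objects with `device_id`, `timestamp`, `metric`, and `value` fields, and that you keep a registry of known devices and the metrics each one reports. Note that `datetime.fromisoformat` only accepts a trailing `Z` from Python 3.11 onward, so timestamps with an explicit offset such as `+00:00` are the safer choice on older versions.

```python
import json
from datetime import datetime

# Hypothetical registry of known data sources and the metrics each one reports.
DEVICE_REGISTRY = {
    "field-a-probe-01": {"soil_moisture_pct", "temperature_c"},
    "greenhouse-02": {"humidity_pct", "temperature_c"},
}

REQUIRED_FIELDS = {"device_id", "timestamp", "metric", "value"}


def validate_message(raw: str) -> list:
    """Return a list of problems with one device message (empty list = valid)."""
    try:
        msg = json.loads(raw)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(msg, dict):
        return ["message must be a JSON object"]

    missing = REQUIRED_FIELDS - set(msg)
    if missing:
        return [f"missing fields: {sorted(missing)}"]

    problems = []
    device = msg["device_id"]
    if device not in DEVICE_REGISTRY:
        problems.append(f"unknown device: {device}")
    elif msg["metric"] not in DEVICE_REGISTRY[device]:
        problems.append(f"device {device} does not report {msg['metric']}")

    try:
        datetime.fromisoformat(msg["timestamp"])
    except (TypeError, ValueError):
        problems.append("timestamp is not ISO 8601")

    value = msg["value"]
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        problems.append("value must be numeric")
    return problems
```

Returning a list of problems, rather than raising on the first one, lets the batch job log every issue with a message and route rejected records to a separate location for later inspection.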

Step 2: Develop a Script

Write a script or program that defines the tasks the batch job performs: how data is collected, how it is processed, and what outputs it produces. Python and Java are common choices because both have mature libraries for data manipulation.
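A batch script is easier to schedule, rerun, and test when collection, processing, and output are separate functions and the time window is derived from a run date rather than from "now". This Python sketch assumes a hypothetical CSV layout (`device_id,timestamp,metric,value`, with naive ISO 8601 timestamps all in one agreed time zone) and computes per-device, per-metric averages for the calendar day before the run date; `run_batch` and the other names are illustrative.

```python
import csv
import io
import sys
from collections import defaultdict
from datetime import date, datetime, timedelta


def batch_window(run_date):
    """Return [start, end): the calendar day before `run_date`, midnight to midnight."""
    end = datetime.combine(run_date, datetime.min.time())
    return end - timedelta(days=1), end


def collect(source, start, end):
    """Collection: yield CSV rows whose timestamp falls inside [start, end)."""
    for row in csv.DictReader(source):
        ts = datetime.fromisoformat(row["timestamp"])
        if start <= ts < end:
            yield row


def process(rows):
    """Processing: average `value` per (device_id, metric)."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for row in rows:
        key = (row["device_id"], row["metric"])
        sums[key] += float(row["value"])
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


def write_output(averages, sink):
    """Output: one CSV line per (device_id, metric) with its average."""
    writer = csv.writer(sink)
    writer.writerow(["device_id", "metric", "average"])
    for (device_id, metric), avg in sorted(averages.items()):
        writer.writerow([device_id, metric, f"{avg:.2f}"])


def run_batch(source, sink, run_date):
    """Run one batch end to end and return the number of groups written."""
    start, end = batch_window(run_date)
    averages = process(collect(source, start, end))
    write_output(averages, sink)
    return len(averages)


if __name__ == "__main__":
    demo = io.StringIO(
        "device_id,timestamp,metric,value\n"
        "probe-01,2024-05-01T06:00:00,temperature_c,18.5\n"
    )
    run_batch(demo, sys.stdout, date(2024, 5, 2))
```

Because the window depends only on `run_date`, rerunning the job for a past date (for example, after an outage) reprocesses exactly the same 24 hours.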

Recommended Practices for Batch Processing

To implement remote IoT batch jobs successfully, follow these best practices:

- Thorough Planning: Before implementation, define the objectives and requirements of your batch job precisely. This keeps the job aligned with your business goals and gives you clear criteria to check it against.

- Performance Monitoring: Track how long each job and each stage takes so you can spot bottlenecks or inefficiencies early, before they delay downstream work.

- Documentation: Keep detailed documentation of your batch job workflows for reference and troubleshooting. This makes updates and modifications easier as your operational needs evolve.

- Regular Testing: Test your batch jobs regularly, ideally against known sample inputs, to verify that they behave as intended and produce accurate results.
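Monitoring and testing can both start small. The Python sketch below times each stage of a job with a hypothetical `timed_stage` context manager, logs the duration, and raises the log level to WARNING when a stage exceeds its time budget (60 seconds by default, an arbitrary placeholder). The `test_` function at the end illustrates the kind of known-input check that regular testing implies; it can be called directly or collected by a test runner such as pytest.

```python
import logging
import time
from contextlib import contextmanager

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("batch")


@contextmanager
def timed_stage(name, timings, warn_after_s=60.0):
    """Time one stage, store the duration in `timings`, and log it."""
    start = time.perf_counter()
    try:
        yield
    finally:
        elapsed = time.perf_counter() - start
        timings[name] = elapsed
        # Escalate to WARNING when the stage overruns its (hypothetical) budget.
        level = logging.WARNING if elapsed > warn_after_s else logging.INFO
        log.log(level, "stage=%s elapsed_s=%.3f", name, elapsed)


def run_job(readings):
    """A toy two-stage job: drop missing readings, then total the rest."""
    timings = {}
    with timed_stage("clean", timings):
        cleaned = [r for r in readings if r is not None]
    with timed_stage("aggregate", timings):
        total = sum(cleaned)
    return total, timings


def test_run_job_ignores_missing_values():
    total, timings = run_job([1.0, None, 2.5])
    assert total == 3.5
    assert set(timings) == {"clean", "aggregate"}
```

Logging durations in a consistent `key=value` form makes them easy to chart or alert on later, whichever log collector you use.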

Addressing Common Challenges

While batch processing offers numerous benefits, it can also present certain challenges. Below are some common issues and their corresponding solutions:

- Challenge: Data Overload - Large datasets can overwhelm systems and lead to inefficiencies. Solution: Implement data filtering and aggregation techniques to manage large datasets effectively, ensuring that only relevant data is processed.

- Challenge: Resource Constraints - Limited resources can hinder the execution of batch jobs. Solution: Optimize resource allocation by scheduling batch jobs during periods of low demand, ensuring that system resources are used efficiently.
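For the data-overload case, filtering and aggregation can happen while the data streams in, so the full raw dataset never has to sit in memory. This Python sketch assumes readings arrive as `(sensor_id, timestamp, value)` tuples from any iterable (for example, a generator reading a file line by line), drops values outside a hypothetical plausible range of -40 to 60, and downsamples to one mean per sensor per hour.

```python
from collections import defaultdict
from datetime import datetime


def hourly_means(readings, low=-40.0, high=60.0):
    """Filter implausible readings and downsample to one mean per (sensor, hour).

    `readings` is any iterable of (sensor_id, timestamp, value) tuples. Only a
    running sum and count per bucket are kept, so memory grows with the number
    of (sensor, hour) buckets rather than with the number of raw readings.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for sensor_id, ts, value in readings:
        if not low <= value <= high:
            continue  # filtering: skip values outside the plausible range
        hour = ts.replace(minute=0, second=0, microsecond=0)
        sums[(sensor_id, hour)] += value
        counts[(sensor_id, hour)] += 1
    return {bucket: sums[bucket] / counts[bucket] for bucket in sums}


if __name__ == "__main__":
    # A generator stands in for a large stream of raw readings.
    raw = (
        ("s1", datetime(2024, 5, 1, 6, minute), 20.0 + minute / 30)
        for minute in range(0, 60, 15)
    )
    for (sensor, hour), avg in sorted(hourly_means(raw).items()):
        print(sensor, hour.isoformat(), round(avg, 2))
```

Downsampling this way trades detail for volume: minute-level spikes disappear from the output, so keep the raw data elsewhere if later analysis might need it.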

Real-World Use Cases

Remote IoT batch jobs have applications across many industries. Notable examples include:

- Healthcare: Processing patient data from wearable devices to monitor health trends and flag potential issues early, supporting proactive healthcare management.

- Manufacturing: Analyzing sensor data from production lines to identify inefficiencies and reduce downtime, improving productivity and lowering costs.

- Transportation: Collecting and processing vehicle data to optimize routes and fuel consumption, improving operational efficiency and reducing environmental impact.

Enhancing Batch Processing Performance

To get the most performance out of your remote IoT batch jobs, consider the following strategies:

- Parallel Processing: Split the work into independent chunks and process them concurrently, which can shorten total run time considerably.

- Caching: Store frequently accessed data (for example, lookup tables or device metadata) so it is not fetched or recomputed on every use.

- Compression: Compress large datasets to reduce storage and transmission costs, at the price of some extra CPU time to compress and decompress them.
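The three strategies can be combined in one small Python sketch: the input is split into chunks that are processed concurrently, a per-sensor lookup is memoized with `functools.lru_cache`, and the merged result is gzip-compressed before storage. `calibration_offset` is a hypothetical stand-in for a slow lookup such as a database or API call. A `ThreadPoolExecutor` is used because pulling data from remote devices is usually I/O-bound; for CPU-heavy pure-Python work, `ProcessPoolExecutor` or a distributed engine such as Apache Spark would be a better fit.

```python
import gzip
import json
from concurrent.futures import ThreadPoolExecutor
from functools import lru_cache


@lru_cache(maxsize=1024)
def calibration_offset(sensor_id):
    """Hypothetical slow lookup; the cache means each sensor is looked up once."""
    return 0.5 if sensor_id.endswith("-b") else 0.0


def process_chunk(chunk):
    """Calibrate and sum one chunk of (sensor_id, value) pairs per sensor."""
    totals = {}
    for sensor_id, value in chunk:
        corrected = value + calibration_offset(sensor_id)
        totals[sensor_id] = totals.get(sensor_id, 0.0) + corrected
    return totals


def split(items, n_chunks):
    """Split a list into at most n_chunks contiguous, roughly equal chunks."""
    size = max(1, -(-len(items) // n_chunks))  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def run_parallel(readings, workers=4):
    """Process chunks concurrently, then merge the per-chunk partial sums."""
    merged = {}
    with ThreadPoolExecutor(max_workers=workers) as pool:
        for partial in pool.map(process_chunk, split(readings, workers)):
            for sensor_id, total in partial.items():
                merged[sensor_id] = merged.get(sensor_id, 0.0) + total
    return merged


def compress_result(result):
    """Serialize the result as JSON and gzip it before storing or uploading."""
    return gzip.compress(json.dumps(result, sort_keys=True).encode("utf-8"))
```

The split/merge shape works because per-sensor sums can be combined in any order; metrics such as medians cannot be merged this simply and need a different partial result per chunk.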

Future Directions in Batch Processing

Batch processing continues to evolve, driven by advances in technology and growing demand for data-driven solutions. Emerging trends include:

- Edge Computing: Processing data closer to the source to reduce latency and the volume of data sent upstream, enabling faster decisions and responses.

- Artificial Intelligence: Integrating AI models into batch jobs to strengthen data analysis and uncover deeper insights.

- Blockchain: Using blockchain technology to protect data integrity in batch processing workflows, providing a transparent, tamper-evident record for sensitive information.

Summary

The RemoteIoT batch job example offers a practical framework for large-scale data processing. With batch processing, organizations can improve the efficiency, accuracy, and scalability of their operations. This guide has covered the fundamentals of remote IoT batch jobs, including their benefits, implementation strategies, and real-world applications.

We encourage you to use the knowledge gained from this article to design and execute your own batch processing workflows.

Data sources and references:

- Apache Spark Documentation - https://spark.apache.org/documentation

- AWS Batch Documentation - https://docs.aws.amazon.com/batch/latest/userguide/

- Google Cloud Dataflow Documentation - https://cloud.google.com/dataflow/docs