Is your business struggling to keep pace with the deluge of data generated by the Internet of Things (IoT)? If so, mastering remote IoT batch job processing on Amazon Web Services (AWS) is fast becoming a necessity for sustainable growth and data-driven decision-making.

In an era defined by the proliferation of connected devices, businesses across industries are finding themselves awash in data. From smart factories and connected healthcare devices to precision agriculture and smart city initiatives, the IoT is creating an unprecedented volume of information. But raw data is useless without the means to process and analyze it. This is where remote IoT batch job processing, particularly on a robust platform like AWS, becomes crucial. This article dives into the intricacies of effectively managing batch jobs within the AWS ecosystem, providing a detailed guide to best practices, real-world examples, and the strategies needed to unlock the true potential of your IoT data.

| Key Concept | Details |
|---|---|
| Core Technology | Remote IoT batch job processing on AWS |
| Primary Goal | Efficiently process and analyze large volumes of IoT data in a scalable, cost-effective, and secure manner using Amazon Web Services. |
| AWS Services Utilized | AWS Batch, AWS Lambda, Amazon EC2, Amazon S3, AWS IoT Core, Amazon CloudWatch, AWS X-Ray. |
| Benefits | Improved efficiency, reduced operational costs, enhanced scalability, better data insights, optimized resource utilization. |
| Target Industries | Healthcare, Manufacturing, Agriculture, Smart Cities, Logistics, Energy. |
| Data Types | Sensor data (temperature, pressure, location, etc.), machine performance metrics, patient vitals, environmental readings, operational data. |
| Key Challenges | Data volume, data velocity, data variety, security concerns, cost management, scalability requirements, ensuring data integrity. |
| Best Practices | Optimizing resource allocation, implementing auto-scaling, encrypting data, using IAM roles, monitoring job queues, regularly auditing security policies. |

The integration of IoT devices with remote cloud-based systems, often referred to as RemoteIoT, facilitates data collection, analysis, and processing from geographically dispersed locations. This technology is pivotal across a variety of industries. For instance, in agriculture, it enables real-time monitoring of crop health and environmental conditions, while in healthcare, it allows for remote patient monitoring and analysis of vital signs. Manufacturing leverages RemoteIoT for predictive maintenance, tracking machine performance and identifying potential issues before they escalate, preventing costly downtime. These are just a few examples of how this technology is reshaping operational efficiency and driving innovation.

AWS offers a powerful and flexible infrastructure that simplifies the management of batch jobs. Services such as AWS Batch, AWS Lambda, and Amazon EC2 are general-purpose compute services that are well suited to the large data volumes generated by IoT devices. Because capacity can be added on demand, batch jobs can keep running during periods of peak load. This allows organizations to scale their data processing capabilities quickly and affordably, without extensive upfront investment in infrastructure.

Batch processing, as a fundamental component of this approach, offers a multitude of advantages:

- Improved Efficiency: Batch processing optimizes resource utilization, enabling the efficient handling of extensive datasets. It allows for parallel processing and the breaking down of complex tasks into manageable chunks, leading to faster processing times.

- Reduced Operational Costs: Through optimized resource utilization, batch processing minimizes operational expenses. By automating tasks and scaling resources as needed, organizations can avoid over-provisioning and unnecessary costs.

- Enhanced Scalability: As data volumes grow, batch processing solutions offer the scalability required to accommodate the increasing demand. Compute resources scale up or down automatically based on workload, so the system can absorb growth within the limits you configure.

AWS Batch stands out as a key service, streamlining the execution of batch computing workloads within the AWS Cloud. It intelligently provisions the optimal quantity and type of compute resources, taking into account the specific requirements of each batch job. This dynamic allocation ensures that resources are used efficiently and cost-effectively. The service's ability to automatically scale resources up or down based on the number of jobs in the queue is a particularly valuable feature.

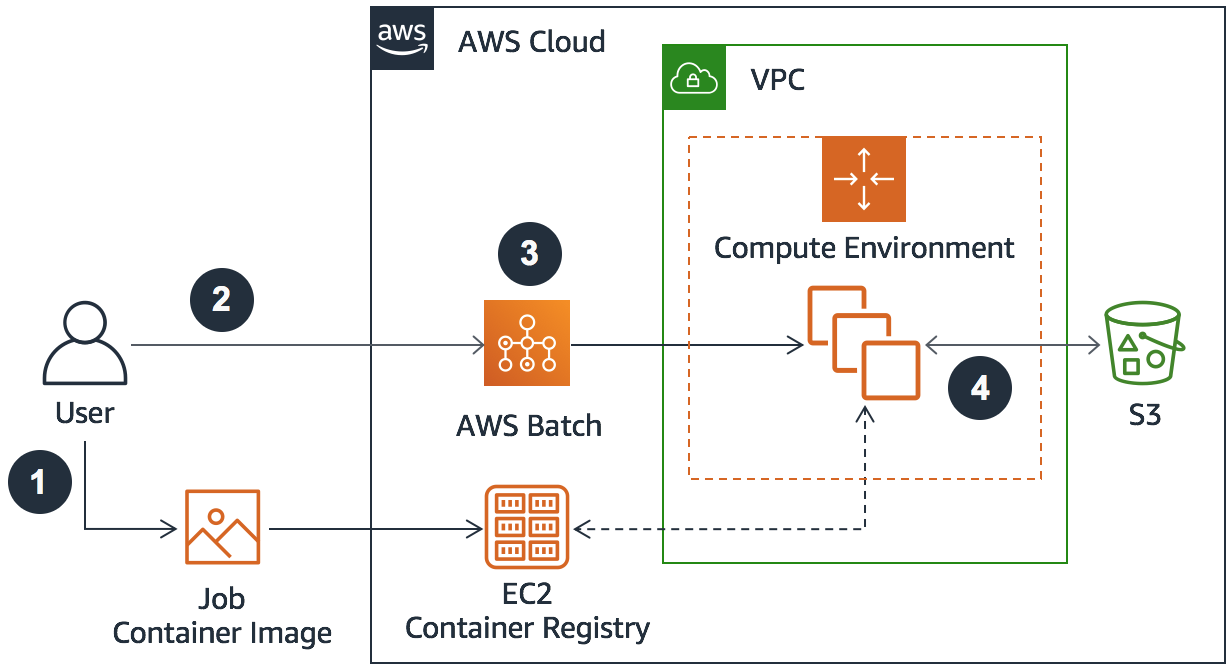

To get started with remote IoT batch jobs, setting up your AWS environment is the first step. This entails the creation of an AWS account, the configuration of IAM roles for access management, and the setup of necessary AWS services. Proper configuration of IAM roles is crucial for granting appropriate permissions. The IAM roles are created to allow AWS services to perform the jobs on your behalf. Configuring AWS Batch involves creating compute environments and job queues. Compute environments define the underlying infrastructure, and job queues manage the order of job submissions and their execution. These steps lay the foundation for successful remote IoT batch processing.

- AWS Account Creation and Access: Sign up for an AWS account, then sign in to the AWS Management Console. This gives you access to the various AWS services and resources you'll need.

- IAM Role Setup: Utilize the IAM (Identity and Access Management) service to configure roles. These roles determine the permissions necessary for batch jobs. For instance, the roles allow your jobs to access data stored in S3 buckets or to interact with other AWS services.

- AWS Batch Configuration: Within the AWS Management Console, configure AWS Batch. This involves creating compute environments and job queues. A compute environment specifies the type of computing resources, while a job queue manages the order and execution of submitted jobs.
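
The compute environment and job queue described above can also be created programmatically. The following is a minimal sketch that only builds the request parameters for the AWS Batch `CreateComputeEnvironment` and `CreateJobQueue` operations; it does not call AWS. The helper names, the default limits, and every identifier passed in (subnet, security group, instance role) are assumptions for illustration, not values from this guide.

```python
import json


def compute_environment_request(name, subnets, security_group_ids,
                                instance_role, max_vcpus=64, use_spot=False):
    """Build keyword arguments for a managed AWS Batch compute environment.

    All argument values are placeholders supplied by the caller.
    """
    return {
        "computeEnvironmentName": name,
        "type": "MANAGED",   # AWS Batch manages the instances
        "state": "ENABLED",
        "computeResources": {
            "type": "SPOT" if use_spot else "EC2",
            "allocationStrategy": (
                "SPOT_CAPACITY_OPTIMIZED" if use_spot else "BEST_FIT_PROGRESSIVE"
            ),
            "minvCpus": 0,            # scale to zero when the queue is empty
            "maxvCpus": max_vcpus,    # upper bound on capacity (and cost)
            "instanceTypes": ["optimal"],
            "subnets": list(subnets),
            "securityGroupIds": list(security_group_ids),
            "instanceRole": instance_role,
        },
    }


def job_queue_request(name, compute_environment_name, priority=1):
    """Build keyword arguments for a job queue bound to one compute environment."""
    return {
        "jobQueueName": name,
        "state": "ENABLED",
        "priority": priority,
        "computeEnvironmentOrder": [
            {"order": 1, "computeEnvironment": compute_environment_name}
        ],
    }


if __name__ == "__main__":
    # Hypothetical identifiers; replace with values from your own account.
    ce = compute_environment_request(
        "iot-batch-ce", ["subnet-EXAMPLE"], ["sg-EXAMPLE"], "ecsInstanceRole"
    )
    queue = job_queue_request("iot-batch-queue", ce["computeEnvironmentName"])
    print(json.dumps({"computeEnvironment": ce, "jobQueue": queue}, indent=2))
    # With boto3 installed and credentials configured, these dictionaries could
    # be passed as boto3.client("batch").create_compute_environment(**ce) and
    # boto3.client("batch").create_job_queue(**queue).
```

Keeping the request construction separate from the API call makes the configuration easy to review and test before anything is provisioned.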

Data generated by IoT devices is often raw or only loosely structured, and usually requires preprocessing before analysis. Batch jobs are crucial in this process: they clean, aggregate, or otherwise transform the raw data into formats suitable for analysis, from which meaningful insights can then be extracted. To process IoT data effectively in batch jobs, follow these steps:

- Data Collection: Begin by gathering data from IoT devices using AWS IoT Core, which serves as a central hub for device connections and data ingestion. This includes the secure communication of data from devices to the cloud.

- Data Storage: Store the collected data in S3 buckets, a highly scalable and cost-effective object storage service. S3 offers robust data storage capabilities, which can hold massive volumes of data, ensuring data availability and durability.

- Data Transformation and Processing: Use AWS Batch to execute data transformation scripts, such as those written in Python or other programming languages. These scripts process the data stored in S3, transforming it into usable formats, and preparing it for analysis.
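
As an illustration of the transformation step, here is a minimal sketch of the kind of Python script a batch job might run. It assumes the raw data is JSON Lines with hypothetical `device_id` and `temperature_c` fields; the field names and the choice of summary statistics are assumptions, and reading from and writing to S3 is left out so that the logic stays self-contained.

```python
import json
from collections import defaultdict
from statistics import mean


def parse_readings(lines):
    """Yield (device_id, temperature) pairs, skipping malformed records."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # cleaning step: drop records that are not valid JSON
        if not isinstance(record, dict):
            continue
        device = record.get("device_id")
        value = record.get("temperature_c")
        is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
        if isinstance(device, str) and is_number:
            yield device, float(value)


def summarize(lines):
    """Aggregate readings into per-device count, min, max, and mean."""
    by_device = defaultdict(list)
    for device, value in parse_readings(lines):
        by_device[device].append(value)
    return {
        device: {
            "count": len(values),
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        }
        for device, values in sorted(by_device.items())
    }


if __name__ == "__main__":
    sample = [
        '{"device_id": "sensor-a", "temperature_c": 20.0}',
        '{"device_id": "sensor-a", "temperature_c": 22.0}',
        'not json',
        '{"device_id": "sensor-b", "temperature_c": null}',
    ]
    print(json.dumps(summarize(sample), indent=2))
```

In a real job, the input lines would come from objects stored in S3 and the summary would be written back to a separate output location.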

To ensure the successful execution of remote IoT batch jobs, adopting best practices is critical. These practices help improve performance, lower costs, and enhance security.

Efficient resource allocation, using techniques like Spot Instances to reduce costs, is essential. Spot Instances let you run jobs on spare EC2 capacity at a discount compared to On-Demand pricing. You pay the current Spot price rather than placing a bid, and in exchange AWS can reclaim the capacity at short notice, so jobs should tolerate interruption and retries. Monitoring job queues is another best practice for identifying bottlenecks and ensuring optimal performance. Scaling resources dynamically with the workload lets your system handle peak demand without overspending, which leads to better resource utilization and cost savings.
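
Because Spot capacity can be reclaimed by AWS, jobs that run on it are usually submitted with a retry strategy. The sketch below builds the parameters for an AWS Batch `SubmitJob` request that retries when the host instance is lost and exits on any other failure. The helper name and the default attempt count are assumptions, and the `Host EC2*` status-reason pattern follows the style of AWS's published examples; check it against the failure messages you actually observe.

```python
def spot_tolerant_submit_request(job_name, job_queue, job_definition, attempts=3):
    """Build SubmitJob keyword arguments with a Spot-friendly retry strategy."""
    if not 1 <= attempts <= 10:
        raise ValueError("AWS Batch allows between 1 and 10 attempts")
    return {
        "jobName": job_name,
        "jobQueue": job_queue,
        "jobDefinition": job_definition,
        "retryStrategy": {
            "attempts": attempts,
            "evaluateOnExit": [
                # Retry when the underlying instance went away (assumed pattern).
                {"onStatusReason": "Host EC2*", "action": "RETRY"},
                # Any other failure is treated as a real error: stop retrying.
                {"onReason": "*", "action": "EXIT"},
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical queue and job definition names.
    request = spot_tolerant_submit_request(
        "nightly-aggregation", "iot-batch-queue", "iot-transform:1"
    )
    print(request)
    # With boto3: boto3.client("batch").submit_job(**request)
```

Retries only help if the job is idempotent, for example by writing its output to a location derived from its input so that a rerun overwrites rather than duplicates results.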

As the IoT ecosystem expands, so does the volume of data that needs processing. Scaling your batch jobs is essential to maintain performance and accommodate increasing workloads. In a managed AWS Batch compute environment, capacity is adjusted automatically between the minimum and maximum vCPU limits you set, so choosing those limits carefully is the main scaling control. Using CloudWatch to monitor metrics and raise alarms is another key strategy: you can set thresholds on performance metrics and react when they are crossed. Finally, optimize job definitions to improve resource utilization. Tuning parameters such as vCPU count, memory allocation, and parallelism helps each job run as efficiently as possible.
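
One common way to add parallelism is an AWS Batch array job, in which each child job receives its position in the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable. The sketch below shows one possible way for each child to select its own slice of the input; the round-robin scheme and the function names are assumptions, not a prescribed method.

```python
import os


def shard(items, index, size):
    """Return the items assigned to child `index` out of `size` children.

    Items are sorted first so every child sees the same order, then dealt
    out round-robin so that the shards are disjoint and cover every item.
    """
    if size <= 0 or not 0 <= index < size:
        raise ValueError("index must satisfy 0 <= index < size")
    ordered = sorted(items)
    return [item for position, item in enumerate(ordered) if position % size == index]


def current_shard(items, size, environ=os.environ):
    """Pick this child's shard using the index that AWS Batch provides."""
    index = int(environ.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))
    return shard(items, index, size)


if __name__ == "__main__":
    # Hypothetical S3 object keys; in a real job these would come from a listing.
    keys = [f"raw/2024-01-01/part-{n:03d}.jsonl" for n in range(10)]
    for child in range(3):
        print(child, shard(keys, child, 3))
```

The array size is set at submission time through the `arrayProperties` parameter of `SubmitJob`, and it should match the `size` the children use when sharding.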

Monitoring is essential for identifying issues and ensuring that your batch jobs run smoothly. AWS provides several tools to help you monitor and optimize your batch jobs. Amazon CloudWatch collects metrics and job logs, allowing you to track key performance indicators (KPIs) such as job completion times and resource utilization. The AWS Batch console offers a user-friendly interface for managing your batch jobs, including viewing job status and logs. AWS X-Ray can help trace and debug performance issues, provided the job's application code is instrumented to emit traces, so that you can identify bottlenecks and optimize job performance.
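
To make the KPIs above concrete, the sketch below summarizes job records shaped like the entries returned by the AWS Batch `DescribeJobs` operation, where `startedAt` and `stoppedAt` are timestamps in milliseconds. The function name and the sample records are assumptions for illustration; fetching the records from AWS is omitted.

```python
from collections import Counter


def summarize_jobs(jobs):
    """Count jobs by status and compute runtime statistics in seconds."""
    status_counts = Counter(job["status"] for job in jobs)
    # Only jobs that have both started and stopped have a measurable runtime.
    durations = [
        (job["stoppedAt"] - job["startedAt"]) / 1000.0
        for job in jobs
        if "startedAt" in job and "stoppedAt" in job
    ]
    return {
        "status_counts": dict(status_counts),
        "mean_runtime_seconds": sum(durations) / len(durations) if durations else None,
        "max_runtime_seconds": max(durations) if durations else None,
    }


if __name__ == "__main__":
    # Made-up records for demonstration only.
    sample_jobs = [
        {"status": "SUCCEEDED", "startedAt": 1_000, "stoppedAt": 61_000},
        {"status": "FAILED", "startedAt": 0, "stoppedAt": 30_000},
        {"status": "RUNNABLE"},
    ]
    print(summarize_jobs(sample_jobs))
```

A growing count of jobs stuck in `RUNNABLE` is often a sign that the compute environment cannot supply enough capacity, which makes it a useful number to watch alongside runtimes.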

Security is paramount when dealing with sensitive IoT data. Implement robust security measures to protect your data and comply with regulatory standards. Encrypt data both in transit and at rest to protect it from unauthorized access. Use IAM roles with least-privilege access to minimize the potential impact of a security breach. Regularly audit and update security policies to ensure they remain effective in the face of evolving threats.
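
As an example of least-privilege access, the sketch below generates an IAM policy document that lets a batch job read from one S3 prefix and write to another, and nothing else. The bucket name, the prefixes, and the helper name are hypothetical; a real deployment would likely need further statements, for example for logging or encryption keys.

```python
import json


def s3_job_policy(bucket, input_prefix, output_prefix):
    """Build an IAM policy limited to reading inputs and writing outputs."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListInputAndOutput",
                "Effect": "Allow",
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
                # Restrict listing to the two prefixes the job works with.
                "Condition": {
                    "StringLike": {
                        "s3:prefix": [f"{input_prefix}*", f"{output_prefix}*"]
                    }
                },
            },
            {
                "Sid": "ReadInput",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{input_prefix}*",
            },
            {
                "Sid": "WriteOutput",
                "Effect": "Allow",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket}/{output_prefix}*",
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical bucket and prefixes.
    policy = s3_job_policy("example-iot-data", "raw/", "processed/")
    print(json.dumps(policy, indent=2))
```

Attaching a policy like this to the role the job runs under means that a compromised job cannot delete objects or reach data outside its own prefixes.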

The healthcare industry benefits from remote IoT batch processing, as IoT devices monitor patient vitals and send data to the cloud for analysis. Batch jobs process this data to generate insights that help improve patient care. In manufacturing, IoT sensors track machine performance. Batch jobs analyze this data to predict maintenance needs and optimize production schedules. This data-driven approach helps manufacturers reduce downtime and increase efficiency.

RemoteIoT batch job processing on AWS provides a robust solution for handling the massive data volumes generated by IoT devices. By implementing the best practices outlined in this guide, you can ensure efficient, secure, and scalable batch job execution. AWS provides the necessary tools and services to handle all aspects of remote IoT data processing, enabling organizations to maximize the value of their IoT data.
